Dear Application Developers

You need to start ignoring GNOME, and GTK.

It’s an unfortunate situation, made even more unfortunate because I and people like me were complacent – I didn’t try to do anything to stop them at any point. I simply switched away from GNOME in 2011. I was naive enough to think that just not using GNOME was enough, and that we could all let GNOME do their thing and make a horrible, user-hostile desktop environment with all their shiny and horrible new UI paradigms, and everybody would be happy.

But I was wrong. I was very wrong.

See, GTK is a very commonly used GUI widget toolkit used for building software – software that isn’t only part of gnome. And GTK is controlled by the gnome people.

And, as I established in ~2011 and have repeatedly been proven right about, the gnome people don’t give a fuck what you want. They know better than you do about UI and UX, you see, and their spiky-haired youths all had a meeting sometime around 2010, and decided that what they wanted was for UIs to be terrible.

It started with the abomination that was gnome 3, which was basically a reaction to smartphones becoming a thing. Suddenly, the gnome people said “Hey, if we make the desktop UX a million times worse, we can perhaps make a mobile experience that’s marginally tolerable, and then you can have the same software run in both places!”

Of course, like everybody else who has tried this, they failed. Because desktop and mobile devices are fundamentally different UI experiences: Desktops have large, good screens with lots of real estate, and actual usable, precise input devices, whereas mobiles have postage-stamp-sized touchscreens, and no mechanism for precise input (though apple has started innovating and adding physical buttons to their devices, so maybe in another couple of decades we’ll see a revolutionary new device with both a touchscreen and a keyboard, wouldn’t that be grand?).

There have been some valiant attempts over the years to unify desktop and mobile. Some more laughable *cough*ubuntu phone*cough* than others. I’ve looked at quite a few of the offerings over the years, and if I had to put my money on someone who’s doing it the correct way, I’d put my money on Enlightenment and EFL. Their UI acknowledges the differences between mobile and desktop, rather than trying to ignore them, and unlike other projects like gnome, it respects user preferences and tries to do what’s appropriate for the device you’re running on.

This is in stark contrast to the gnome approach, which is: pretend everything is a mobile device in defiance of user preference, destroying the desktop user experience, while making a mobile experience that simply doesn’t stack up next to UIs which are genuinely designed for mobile.

Gnome’s hostility to users started a long long time ago, and it hasn’t changed to this day. If anything it’s gotten worse. It’s always sad to see these organisations who fuck up their software, and then rather than admitting they’ve fucked up, double down and continue on the same route (Oh Hi, Mozilla!).

And when this was just limited to gnome3 being a piece of shit, that was fine. But now they’ve started mandating it in their UI toolkit. Now they’re working towards making it impossible to use GTK to make software with a good UI.

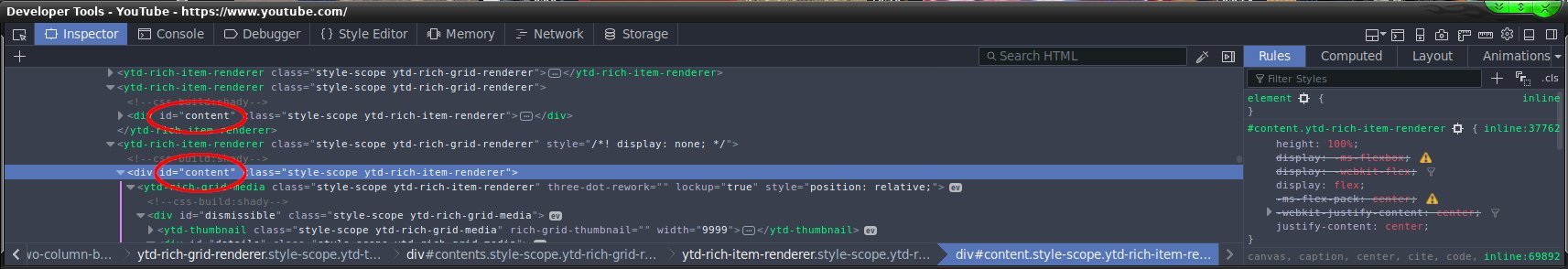

It started around the time of gnome 3, when they decided that scrollbars were a bad thing, and they implemented the “hidden scrollbar” paradigm, where you can’t see scrollbars on scrollable windows until you move your mouse to the side/bottom of the window, then the scrollbar pops into existence. This was not at all fine and good, because apparently nobody working on imposing this revolutionary new interface on everybody ever bothered to actually, you know, try using it. I think my favourite bug created by this is the issue – which still persists now, over a decade later – where it’s very difficult to select the final item in a list, because hovering your mouse over about 80% of that list item’s vertical area will cause a horizontal scrollbar to pop into existence. But I didn’t want to scroll, I wanted to select the last item in the list. And now I can’t, unless I very precisely hover my mouse over a 3-pixel area where the scrollbar won’t activate. How I’m supposed to do this on mobile with no precise pointing device is left as an exercise for the imagination (in large part because nobody ever built a mobile operating system or device which uses GTK*, because you wouldn’t – instead you’d use a toolkit designed for mobile, so that the UX isn’t shit).

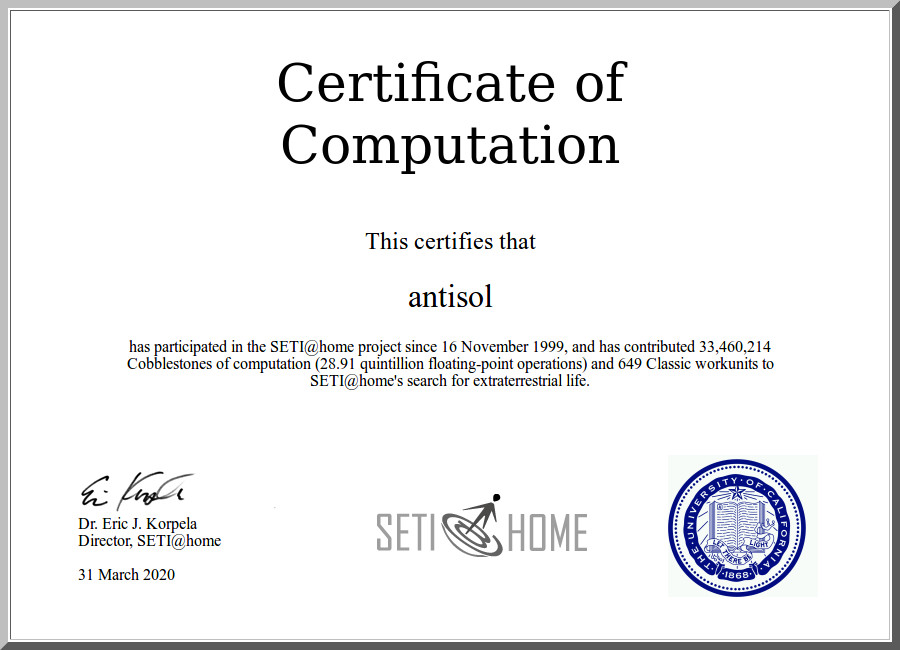

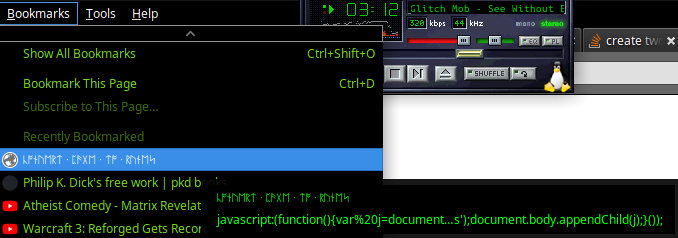

(* OK, fine, this is hyperbole – there have been operating systems intended for mobile devices which used GTK. I tried out a bunch of them on my Pinephone Pro a couple of years ago. They were uniformly terrible. Here’s a video I made demonstrating exactly how well that went. Note the near 10-second lag between pressing “play” and music actually starting. For comparison, I was playing MP3s with excellent quality and no skipping or input lag with an interface that I consider superior in every way on a pentium 90 in about 1997, and the MP3 player PC I built into my car around 2000 or 2001 took somewhere around 15-20 seconds to boot into the OS from power-on and start playing music)

It continued when they decided to break the windowing paradigm by treating this “client side decorations” nonsense as a legitimate way to do a UI. Which it IS NOT. It’s broken in so many ways. I think the biggest and simplest flaw to point out with this is the way that now when your application is hanging, you can’t just click on the “X” close button to kill it. Because now that close button is drawn by the application, not the window manager. And the same goes for trying to move, resize, or shade your window.
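To make the hung-application problem concrete, here is a toy model of the two kinds of close button. Everything in it is made up for illustration (`App`, `click_client_side_close`, `click_wm_close` are not real GTK or window manager APIs), and the "window manager kills an unresponsive app" behaviour is a simplification of what real window managers offer.

```python
class App:
    """Toy application with an event loop that can hang."""

    def __init__(self):
        self.hung = False
        self.running = True

    def handle_event(self, event):
        """Process one event; return False if the app never got to it."""
        if self.hung:
            return False  # event sits in the queue forever
        if event == "close":
            self.running = False
        return True

    def kill(self):
        """Forced termination from outside the app's event loop."""
        self.running = False


def click_client_side_close(app):
    # The button is drawn by the app, so the click is just another
    # event for the app to process. A hung app never processes it.
    app.handle_event("close")


def click_wm_close(app):
    # The button is drawn by the window manager, which asks the app
    # to close and (assumption) force-kills it if it does not respond.
    if not app.handle_event("close"):
        app.kill()
```

With a hung `App`, `click_client_side_close` does nothing at all, while `click_wm_close` still gets rid of the window, because the code handling the click lives outside the process that has stopped responding.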

The stupidest thing about all that is that they have to support both mechanisms, because you can still signal to the window manager “hey i want to close/minimise/maximise/resize this window”, because you still need to be able to do that stuff by right-clicking on a task bar. So now they have the same logic in BOTH the window manager AND the application.

Client side decorations were created by the devil. If you like them, you are wrong. They fundamentally break how the windowing system is supposed to work by breaking the separation of concerns between what is managed by the application and what is managed by the window manager: The window manager is supposed to be in charge of windows, and how they’re – you know – managed. Not the application. This is all stuff that was thought through and decided on back in the 90s, or earlier, by people way smarter than you or me or especially anybody who has ever worked on gnome.

When you have client-side decorations, what you’re really doing is taking on the job of the window manager in your application. Where it doesn’t belong. And this has several consequences, as outlined previously there’s the issue of now your close button doesn’t work when your application is hanging. But you’ll also see fun bugs with window movement, where the window doesn’t draw itself properly while being moved. Not to mention that now you’ve got a different title bar theme to every other application the user uses, and that when you’re drawing your client side decorations there’s no way to do that in a way that’s backwards compatible with decades worth of themes.

“oh but the window titlebar represents a huge amount of unused screen real estate!” I hear you chorus, as if you need all 1080+ pixels of vertical resolution to get anything done, despite the fact that once upon a time 268 vertical pixels was called “high resolution”:

Client side decorations break another very fundamental piece of UI design, which is that the user expects window management functions to be in the titlebar, and application-specific functions to be inside the application’s interface. These are not the same thing, and they shouldn’t be. This has been a convention for about forty years at this point – you’re fighting 40 years of muscle memory every time you place a hamburger menu inside a titlebar. I don’t look in an application’s titlebar for its menu, and I never will, because that’s fundamentally not where it’s supposed to be. Further, when you clutter your application’s titlebar with controls, it becomes more difficult to move the window around, because now half of the titlebar which I would normally use to move a window is filled with buttons.

But, look, I’ll be charitable, and I’ll concede that sometimes, in some rare cases, it might be appropriate to add a custom, application-specific button to the titlebar. I’ll even concede that some people might want to have a silly hamburger menu in their titlebars, and these people should ~~be sterilised for the good of humanity~~ totally be allowed to do that if they’re sure that’s what they want. In those cases, here’s how this should work: your application should send a message to the window manager, saying “hey add a button/menu/whatever to the titlebar with this label, and send me this signal when it’s pressed”.

Yes, this will involve working with the people who make window managers, and coming up with a standard for how to do this. You’ll need to standardise on what control types are allowed in the title bar, for example – you won’t just be able to place arbitrary widgets there. Ideally this should become a new ICCCM or EWMH type standard which many window managers could adopt.
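Here is a rough sketch of what that kind of protocol might look like, modelled in plain Python rather than as real X11 messages. No such standard exists as far as this post is concerned, so every name here (`WindowManager`, `TitlebarControl`, `ALLOWED_CONTROL_TYPES`, `request_control`, `user_activates`) is hypothetical, and the set of allowed control types is just a guess at what a standard might settle on.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical: the control types a standard might permit in a title bar.
ALLOWED_CONTROL_TYPES = {"button", "menu", "toggle"}


@dataclass
class TitlebarControl:
    control_id: str    # chosen by the application, echoed back on activation
    control_type: str  # must be one of ALLOWED_CONTROL_TYPES
    label: str


@dataclass
class WindowManager:
    """Toy window manager that owns the title bar and everything drawn in it."""

    controls: Dict[int, List[TitlebarControl]] = field(default_factory=dict)
    callbacks: Dict[int, Callable[[str], None]] = field(default_factory=dict)

    def register_window(self, window_id: int, on_signal: Callable[[str], None]) -> None:
        self.controls[window_id] = []
        self.callbacks[window_id] = on_signal

    def request_control(self, window_id: int, control: TitlebarControl) -> bool:
        """The application asks for a control; unsupported types are refused."""
        if control.control_type not in ALLOWED_CONTROL_TYPES:
            return False
        self.controls[window_id].append(control)
        return True

    def user_activates(self, window_id: int, control_id: str) -> None:
        """The WM saw the click, so it sends the agreed signal to the application."""
        self.callbacks[window_id](control_id)
```

The point of the design is that the application only describes what it wants and receives a signal back. Drawing, theming, and placement stay with the window manager, and a request for an arbitrary widget simply gets turned down.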

But Noooooooooooooooooo, the gnome people decided to go a different route: essentially do away with the concept of window managers, instead letting the toolkit and application do a bunch of the window manager’s job. This is terrible design, and fundamentally breaks a bunch of stuff.

(Incidentally, check out this list of ICCCM-compatible window managers. Notice how metacity (gnome 2) is on the list but not mutter (gnome 3)? That’s because gnome have decided that they don’t give a fuck about established standards – they’ll do what they want, and they’re not interested in playing nice with the wider software community. Or in what their users want)

As a bonus, if client side decorations* were implemented in this more correct way, it would be simple for window managers to provide options to separate the window management controls from application-supplied menus and buttons – your window manager could have a “menu type” setting with “sensible hierarchical menus” and “terrible hamburger menu” options, and it could draw your application menus in the way the user prefers. It could even emulate the mac way of doing things and put your application menu up at the top of your screen, if that’s what you want, and not in the application at all.

(* note that they wouldn’t actually be “Client Side Decorations”, anymore, because that means “my application is going to take on a bunch of the window manager’s responsibilities, such as drawing window decorations”. Instead they would be a more sensible thing, that you might call something like “titlebar controls” or maybe “window manager widgets”)

Interestingly, the one saving grace that GTK has when it comes to the destruction of good user interfaces is one of the other user-hostile things that the gnome people have done with their toolkit – decided that backwards compatibility is for losers.

This has meant that for a decade, I could just continue to use my gtk2 software, with decent interfaces, because nobody wanted to port their software to gtk3 or gtk4 for no reason. It was only when gtk2 went EOL and then subsequently started losing support in distros that everybody started going to the (massive and unnecessary) effort required to port over to gtk3. And that’s when we saw applications that have had great interfaces for 20 years turn to shit.

But now I’m seeing it everywhere. All the software I loved for ages has turned into trash, with shitty, buggy interfaces that have had to be reworked in nasty, unintuitive ways in order to accommodate the dictates of GTK. Apparently in gtk4 menu bars of the kind we’ve been using for decades and decades are now difficult or different to do.

There’s no reason for that other than to push application developers to use gnome’s preferred UI paradigms.

Which are all terrible.

And the gnome people aren’t interested in your opinion – they’re doubling down on the terrible UI that they prefer.

This isn’t (just) a case of “oh sorry we couldn’t be bothered being backwards compatible, again, so you’ll have to re-write your entire UI”, this is the maintainers of a UI toolkit intentionally trying to push application developers to adopt new UI paradigms, regardless of what the application developers or their end users want.

But, luckily, there’s a simple solution!

Just start completely ignoring GNOME. And GTK. Pay no attention to anything they do. Do not migrate your software to use their new toolkits, just stay on whatever version you’re on.

I’ve heard people claim that “oh we’ll need to migrate to gtk4 sooner or later, gtk2 and gtk3 will stop being shipped”.

To which my response is: “only if people like you let them do that”.

As long as there’s still a bunch of software using gtk2 (which there is, a decade after its end of life), GTK2 will still be packaged and shipped in distros – even if it’s not installed by default anymore.

Hell, I found out not long ago that the cinepaint project still maintains their own fork of GTK1. Respect.

GTK2 isn’t going anywhere any time soon. Instead, we’ll just have a situation where lots of users have gtk2, 3, and 4 installed and coexisting, and a bunch of interfaces that are inconsistent, all because the GTK/Gnome people couldn’t be bothered being backwards compatible and respecting user wishes.

I won’t ever be rewriting any of my gtk2 software to use gtk3 or 4. Instead I’ll do one of two things:

- Migrate my software to another toolkit like EFL or wxwidgets, or

- perhaps, if I get really bored, implement my own gtk2 compatibility layer on top of some other toolkit like EFL, or wxwidgets

I think the second option would be really nice. I’d like to see someone do it. Maybe I’ll have to. What you want is something that’s API-compatible with GTK2 so that application developers can simply swap out some old gtk includes for new ones and recompile their code against the compatibility shim, rather than having to rework their entire UI.
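As a very rough illustration of the shim idea: the functions below keep their GTK2 names (`gtk_window_new`, `gtk_window_set_title`, `gtk_button_new_with_label`, `gtk_container_add`, `g_signal_connect`) but hand all the work to a different backend. A real shim would be a C library with matching headers; this is only a Python model of the shape of it. `FakeBackend` and `emit` are invented for the sketch and are not the EFL or wxWidgets API.

```python
class FakeBackend:
    """Stand-in for some other toolkit (hypothetical, not a real API)."""

    def __init__(self):
        self.widgets = {}
        self.next_id = 1

    def create(self, kind, **props):
        widget_id = self.next_id
        self.next_id += 1
        self.widgets[widget_id] = {
            "kind": kind, "children": [], "handlers": {}, **props
        }
        return widget_id


backend = FakeBackend()


# Shim functions keep the GTK2 names so that calling code stays unchanged.
def gtk_window_new(window_type=0):
    return backend.create("window", title="")


def gtk_window_set_title(window, title):
    backend.widgets[window]["title"] = title


def gtk_button_new_with_label(label):
    return backend.create("button", label=label)


def gtk_container_add(container, widget):
    backend.widgets[container]["children"].append(widget)


def g_signal_connect(widget, signal_name, handler, data=None):
    backend.widgets[widget]["handlers"][signal_name] = (handler, data)


def emit(widget, signal_name):
    """Helper for this sketch only (not GTK2): fire a signal like the backend would."""
    handler, data = backend.widgets[widget]["handlers"][signal_name]
    handler(widget, data)
```

Application code written against the old names keeps working, and only the thing underneath changes. The hard part in practice would be covering the full GTK2 surface and its behaviour, which this sketch does not attempt.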

But that’s a nice-to-have. The core thing here is that we need to start simply ignoring Gnome and Gtk, and migrating away from both.

Don’t rewrite your interface in GTK3 or GTK4. Instead, if you really do need to rewrite (hint: you don’t), rewrite your interface on another toolkit. One which respects their users. One which is interested in backwards compatibility. Gnome and GTK have demonstrated 3 times now that they’re not interested in being backwards compatible. Instead they expect everyone else in the world to rewrite their interfaces every few years, and they’ve decided to make user interfaces worse in every respect.

This is unacceptable. Don’t let them get away with it. Don’t play their game.